After The US Election, Key People Are Leaving Facebook And Torching The Company In Departure Notes

On Wednesday, a Facebook data scientist departed the social networking company after a two-year stint, leaving a farewell note for their colleagues to ponder. As part of a team focused on “Violence and Incitement,” they had dealt with some of the worst content on Facebook, and they were proud of their work at the company.

Despite this, they said Facebook was simply not doing enough.

“With so many internal forces propping up the production of hateful and violent content, the task of stopping hate and violence on Facebook starts to feel even more sisyphean than it already is,” the employee wrote in their “badge post,” a traditional farewell note for any departing Facebook employee. “It also makes it embarrassing to work here.”

The departing employee declined to speak with BuzzFeed News and asked not to be named for fear of abuse and reprisal.

Using internal Facebook data and projections to support their points, the data scientist said in their post that roughly 1 of every 1,000 pieces of content — or 5 million of the 5 billion pieces of content posted to the social network daily — violates the company’s rules on hate speech. More stunning, they estimated using the company’s own figures that, even with artificial intelligence and third-party moderators, the company was “deleting less than 5% of all of the hate speech posted to Facebook.” (After this article was published, Facebook VP of integrity Guy Rosen disputed the calculation, saying it "incorrectly compares views and content." The employee addressed this in their post and said it did not change the conclusion.)

The sentiments expressed in the badge post are hardly new. Since May, a number of Facebook employees have quit, saying they were ashamed of the impact the company was having on the world or worried that the company’s inaction in moderating hate and misinformation had led to political interference, division, and bloodshed. Another employee was fired for documenting instances of preferential treatment of influential conservative pages that repeatedly spread false information.

"While we don’t comment on individual employee matters, this is a false characterization of events," Facebook spokesperson Joe Osborne said of this incident.

But in just the past few weeks, at least four people involved in critical integrity work related to reducing violence and incitement, crafting policy to reduce hate speech, and tracking content that breaks Facebook’s rules have left the company. In farewell posts obtained by BuzzFeed News, each person expressed concerns about the company’s approach to handling US political content and hate speech, and called out Facebook leadership for its unwillingness to be more proactive about reducing hate, incitement, and false content.

“We have made massive integrity investments over the past four years, including substantially growing the team that works to keep the platform safe, improving our ability to find and take down hate speech, and protecting the US 2020 elections,” said Osborne. “We’re proud of this work and will continue to invest and build on learnings moving forward.”

The departures come as Facebook’s “election integrity” effort is undergoing major changes in the wake of the 2020 US election. The Information recently reported that the company’s civic integrity team — which was charged with "helping to protect the democratic process" and reducing "the spread of viral misinformation and fake accounts" — was recently disbanded as a stand-alone unit. It also reported that a proposal from the company’s integrity teams to throttle the distribution of false and misleading election content from prominent political accounts, like President Donald Trump’s, was shot down by company leadership.

"We continue to have teams and people dedicated to elections work, and will expand on the work of our civic team to other focus areas for the company," Osborne said about changes to the civic integrity team. "Integrity touches everything we do, which is why it’s important to grow and better integrate the teams doing this work across the organization."

As Facebook restructures its integrity team, some employees leaving say they do not have confidence in the company to fix or even temper its problems. While Facebook publicly claims its artificial intelligence measures catch a lot of offending content before it’s reported by users, there have been manifold examples where that system failed, leading to disastrous real-life consequences like a Facebook-organized militia event that coincided with the shooting deaths of two protestors in Kenosha, Wisconsin.

“AI will not save us,” wrote Nick Inzucchi, a civic integrity product designer who quit last week. “The implicit vision guiding most of our integrity work today is one where all human discourse is overseen by perfect, fair, omniscient robots owned by [CEO] Mark Zuckerberg. This is clearly a dystopia, but one so deeply ingrained we hardly notice it any more.”

The departing data scientist expressed similar concerns. “Our current approach to automation is not going to solve most of our integrity problems,” they wrote.

Their post also argued, with data, that Facebook’s “very apparent interest in propping up actors who are fanning the flames of the very fire we are trying to put out” makes it impossible for people to do their jobs. “How is an outsider supposed to believe that we care whatsoever about getting rid of hate speech when it is clear to anyone that we are propping it up?” they asked.

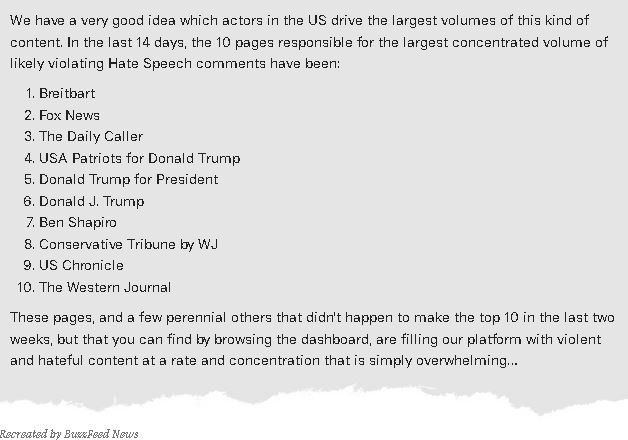

Using data from a Facebook tool called the “Hate Bait dashboard,” which can track content from groups and pages that leads to hateful interactions, the data scientist listed the 10 US pages with the “largest concentrated volume of likely violating Hate Speech comments” in the past 14 days. All were pages associated with conservative outlets or personalities, including Breitbart News, Fox News, the Daily Caller, Donald Trump’s campaign and main account, and Ben Shapiro. They also shared a sample of the hateful comments posted on a recent Breitbart News post about Nancy Pelosi’s support for transgender athletes.

The Hate Bait Dashboard

A Facebook data scientist used the company’s “Hate Bait dashboard” to show the top 10 US pages over a recent period of 14 days that led to the most hateful interactions. BuzzFeed News has recreated the post below:

“I can’t overemphasize that this is a completely average run-of-the-mill post for Breitbart or any of the other top Hate Bait producers,” they wrote, referring to comments that called for the assault or killing of trans individuals.

“They all create dozens or hundreds of posts like this [a] day, each eliciting endless volumes of hateful vile comments—and we reward them fantastically for it,” they wrote, emphasizing their own words.

The data scientist’s comments about preferential treatment of US conservative pages and voices follow other internal concerns and evidence. In November, a departing member of Facebook’s policy organization wrote that “right-leaning US accounts are far more likely than others to engage in hate speech and violence incitement and far more likely to spread misinformation.” The person declined to speak with BuzzFeed News and asked that their name not be published for fear of retaliation.

“Very conservative and conservative users shared more than seven times as much misinformation on the platform in a given period as did moderate, liberal, or very liberal users,” they wrote, linking to a news article about a recent study to support their claim.

Another Facebook core data scientist, who had been at the company for more than five years, also quit this week. They asked BuzzFeed News not to name them for fear of reprisal, but echoed their colleagues' concerns in their farewell post.

“I think Facebook probably has a net negative effect on the quality of political discourse, at least in Western countries,” they wrote. They described a randomized trial that found that “paying people to stop using Facebook for a month caused a decline in negative partisan feelings by around 0.1 [standard deviation].”

The core data scientist also said they had seen “a dozen proposals to measure the objective quality of content on News Feed diluted or killed because … they have a disproportionate impact across the US political spectrum, typically harming conservative content more.” Beyond that, they added that Facebook’s content policy decisions are “routinely influenced by political considerations” to ensure the company “avoids antagonizing powerful political players.”

The other departing data scientist said their team’s incremental changes can improve things on the margin but lack any major impact given Facebook’s scale. For example, they cited a colleague who was able to detect and prevent an additional 500,000 views of English language hate content in the US a day. But they pointed to Trump’s infamous “When the looting starts, the shooting starts” post as an example of how the team’s wins were eclipsed by Facebook’s unwillingness to take action against prominent purveyors of hate speech or violent incitement.

“Trump’s ‘Looting and Shooting’ post was viewed orders of magnitude of times more than the total number of views that we prevent in a day,” they wrote, citing the president’s May post, which was not taken down by the company despite its suggestion that those protesting the police killing of George Floyd be shot. “It’s not hard to draw a straight line from that post to the actual shooting that took place at protests in the months that followed.”

Despite their pointed criticism, the data scientist commended what they said was an improvement of Facebook’s “real-time monitoring,” which was apparently able to prevent “a number of events that might have ended up being similar to Kenosha.” They said the company enacted dozens of interventions that they believe led to a lower-than-expected number of violence and incitement reports during and following Election Day. For context, the data scientist shared a graph that showed user reports for violence and incitement peaked at around 50,000 in the days after the election, while the top day during the height of protests in the wake of the police killing of George Floyd saw more than 125,000 reports.

BuzzFeed News previously reported the existence of a “violence and incitement trends” metric that jumped 45% over five days in the period after Election Day as misinformation spread about the totaling of votes in the recent presidential election.

The data scientist also offered parting advice to Facebook: “hire more people.” Noting that the company was able to afford it, they suggested doubling the number of employees in the integrity organization. They said it would allow the company to branch out from focusing on US and English-centric content, a concern of another former data scientist.

The four departures and their badge posts triggered a discussion on Facebook’s internal message boards this week, with employees concerned that they could kick off a wider trend. “To what extent are integrity leadership and FB INC leadership hearing and responding to these criticisms?” one person posted to an internal group called “Let’s Fix Facebook.”

Internal survey data from more than 49,000 Facebook employees, first reported by BuzzFeed News last month, showed that only 51% of respondents said they believed Facebook was having a positive impact on the world. A question about the company’s leadership saw only 56% of employees offer a favorable response.

On Thursday, Zuckerberg detailed some of the turnover and subsequent hiring at the company. In a company-wide meeting, he said he was proud of employees' ability to adapt to the new normal of working from home, particularly for those people who joined recently and have yet to see the inside of a Facebook office.

"I think we now have 20,000 employees who've never been in our office because they joined this year," he said to the more than 50,000 employees at the company. The Facebook chief also said he expects some people to start coming back to offices next year, though the company would not make a COVID-19 vaccine mandatory for returning workers.

As morale inside Facebook plummets, leaders are growing more concerned about employee discussions occurring on the company’s internal forums. Once known for its open culture, Facebook and CEO Zuckerberg, who are staring down landmark antitrust lawsuits from 48 attorneys general and the Federal Trade Commission, repeatedly advised employees this week to avoid discussing anything related to the ongoing litigation given the possibility of legal discovery.

Employees have also been required to take online “Competition Training” courses to understand “Competition Compliance Policy.” The training instructs people who are discussing “complicated issues” to meet in person or over videoconference, while reminding them that others may have access to their communications.

Facebook was quick to shield the departing data scientist’s post from outside eyes. The data scientist, who had initially published their writing in a Google Document to create “an additional layer of security” and prevent it from getting to this news outlet, was asked by an internal moderator to take the post off Google’s tools.

“Your badge post just got flagged for having confidential information in it,” the moderator wrote to the data scientist.